Turn your notebooks into production-ready applications

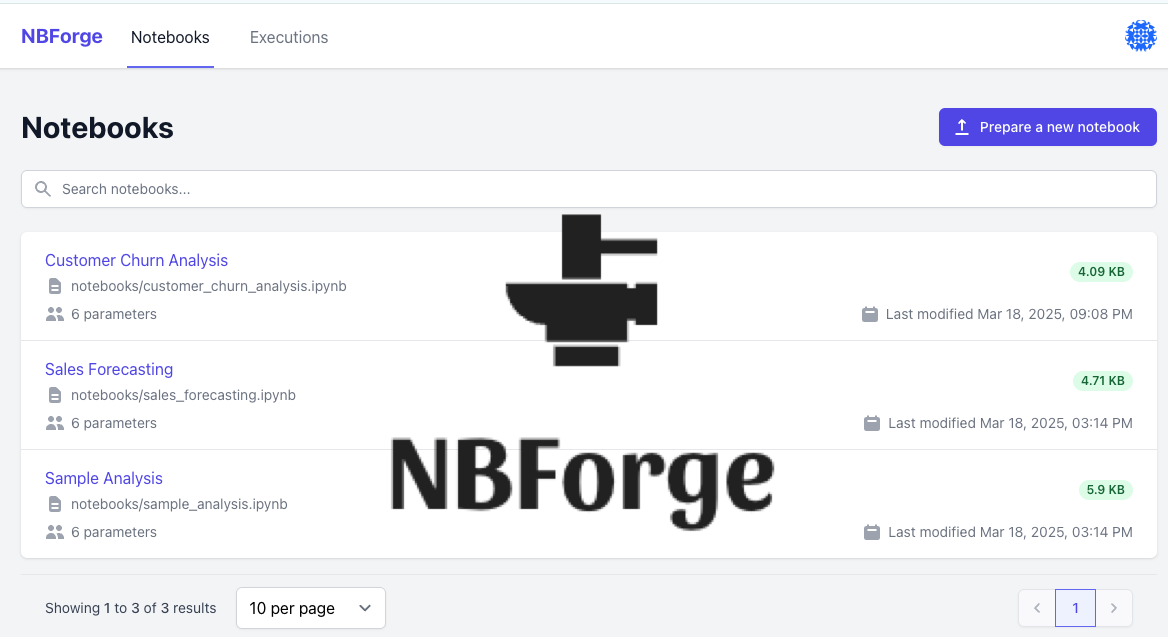

NBForge is an open-source platform that allows you to run Jupyter notebooks with parameterized inputs, data pipeline integration, and a clean UI for your stakeholders.

Identify your challenge and discover how NBForge can help

Tired of generating the same reports over and over for stakeholders who can't run your code?

Struggling to integrate your Jupyter notebooks with production systems and orchestration tools?

Need a better way to handle long-running notebooks with proper monitoring and notifications?

Frustrated with stale notebook copies floating around your team with no central source of truth?

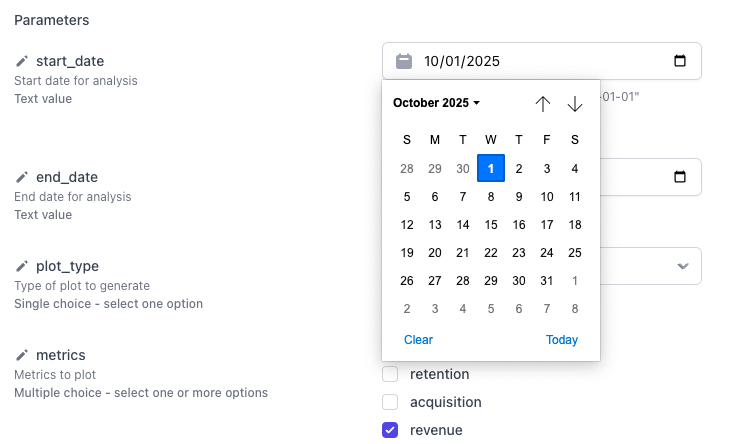

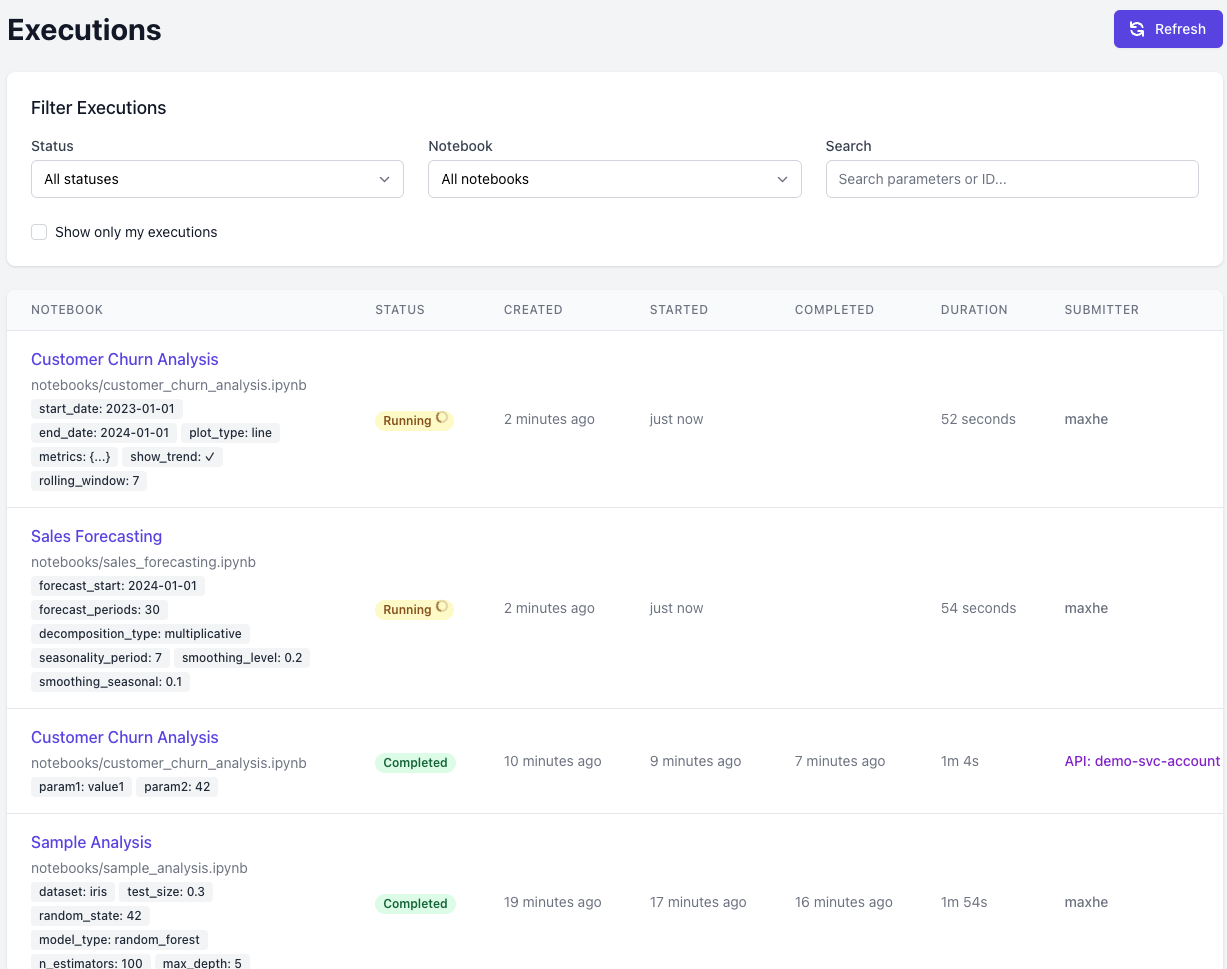

Stop being the bottleneck for running reports. NBForge creates user-friendly forms from your notebook parameters, allowing business stakeholders to run reports themselves with different inputs.

Execute notebooks quickly in containerized environments with per-notebook CPU and memory settings, delivering consistent, reliable results that scale with your workload.

```json
{
  "resources": {
    "cpu_milli": 2000,
    "memory_mib": 4096
  }
}
```

Select specific Python versions and packages for each notebook, ensuring perfect compatibility with your code requirements and dependencies.
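To make the idea concrete, here is a minimal sketch of how a per-notebook environment spec could be turned into an install command. It assumes the spec is a JSON object with a `python_version` string and a `requirements` mapping from package name to a PEP 440 version specifier, the same shape as the configuration that follows. `build_install_command` is a hypothetical helper, not part of NBForge.

```python
import re
import shlex

# PEP 440 comparison operators; "===" is listed before "==" so the longer one matches first
SPECIFIER_OPERATOR = re.compile(r"^(===|~=|==|!=|<=|>=|<|>)")


def build_install_command(spec: dict) -> list[str]:
    """Hypothetical helper: turn a notebook's environment spec into a pip command."""
    # Assumes an interpreter named like "python3.10" is on PATH in the target image
    python = f"python{spec['python_version']}"
    packages = []
    for name, version in spec.get("requirements", {}).items():
        # A bare version like "2.0" would be glued onto the name ("pandas2.0"),
        # so require an explicit comparison operator
        if not SPECIFIER_OPERATOR.match(version):
            raise ValueError(f"{name}: {version!r} does not start with a version operator")
        packages.append(f"{name}{version}")
    return [python, "-m", "pip", "install", *packages]


notebook_env = {
    "python_version": "3.10",
    "requirements": {
        "pandas": "==2.0.*",
        "matplotlib": ">=3.4.0",
        "scikit-learn": ">=1.4.0",
    },
}
# shlex.join quotes arguments containing shell metacharacters such as > and *
print(shlex.join(build_install_command(notebook_env)))
```

Keeping the command as a list means it can be passed straight to `subprocess.run` without going through a shell; `shlex.join` is only used here for display.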

```jsonc
// Library requirements at the notebook level
{
  "python_version": "3.10",
  "requirements": {
    "pandas": "==2.0.*",
    "matplotlib": ">=3.4.0",
    "scikit-learn": ">=1.4.0"
  }
}
```

Automatically generate intuitive parameter forms from notebook metadata, allowing non-technical users to run notebooks with different inputs.
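One way such forms could be derived is from a dedicated parameters cell. The sketch below assumes the papermill convention: a code cell whose metadata carries the tag `parameters` and contains simple `name = literal` assignments. The widget names and `infer_form_fields` are hypothetical, and NBForge's actual metadata format may differ.

```python
import ast

# Hypothetical mapping from a default value's type to a form widget
WIDGETS = {bool: "checkbox", int: "number", float: "number", str: "text"}


def parameters_source(notebook: dict) -> str:
    """Return the source of the first code cell tagged 'parameters' (papermill style)."""
    for cell in notebook.get("cells", []):
        tags = cell.get("metadata", {}).get("tags", [])
        if cell.get("cell_type") == "code" and "parameters" in tags:
            source = cell.get("source", "")
            # .ipynb files store source either as one string or as a list of lines
            return "".join(source) if isinstance(source, list) else source
    return ""


def infer_form_fields(notebook: dict) -> list[dict]:
    """Turn `name = literal` assignments in the parameters cell into form fields."""
    fields = []
    for node in ast.parse(parameters_source(notebook)).body:
        # Only simple assignments to a single name, e.g. `include_charts = True`
        if not (isinstance(node, ast.Assign) and len(node.targets) == 1
                and isinstance(node.targets[0], ast.Name)):
            continue
        try:
            default = ast.literal_eval(node.value)
        except (ValueError, TypeError):
            continue  # skip computed defaults such as `date.today()`
        fields.append({
            "name": node.targets[0].id,
            # Exact type lookup, so True maps to "checkbox" rather than "number"
            "widget": WIDGETS.get(type(default), "text"),
            "default": default,
        })
    return fields


notebook = {
    "cells": [{
        "cell_type": "code",
        "metadata": {"tags": ["parameters"]},
        "source": ['report_month = "2024-01"\n', "include_charts = True\n", "top_n = 10\n"],
    }]
}
for field in infer_form_fields(notebook):
    print(field)
```

Using each default's type to pick a widget keeps the notebook as the single source of truth for the form. A parameters cell containing IPython magics (for example `%matplotlib inline`) would need those lines stripped before `ast.parse`.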

Seamlessly integrate with Airflow and other orchestration tools to incorporate notebooks into your data pipelines and automated workflows.

```python
# Airflow DAG example with NBForge integration
import os
from datetime import datetime

import requests
from airflow import DAG
from airflow.operators.python import PythonOperator


def run_nbforge_notebook(**context):
    api_url = "https://your-nbforge.com/api/v1/notebooks/execute"
    service_account_key = os.getenv("NBFORGE_SERVICE_ACCOUNT_KEY")
    if not service_account_key:
        raise ValueError("NBFORGE_SERVICE_ACCOUNT_KEY is not set")

    # Get parameters from Airflow context
    # (Airflow 2.2+ also exposes this value as context["logical_date"])
    execution_date = context["execution_date"]

    # Configure notebook execution
    payload = {
        "notebook_path": "finance/monthly_report.ipynb",
        "parameters": {
            "report_month": execution_date.strftime("%Y-%m"),
            "include_charts": True,
        },
    }

    # Execute notebook; the timeout assumes the API returns an execution ID
    # promptly instead of waiting for the whole run to finish
    response = requests.post(
        api_url,
        headers={
            "Authorization": f"Bearer {service_account_key}",
            "Content-Type": "application/json",
        },
        json=payload,
        timeout=30,
    )
    # Fail the task with a clear HTTP error instead of a KeyError below
    response.raise_for_status()

    # Store execution ID for downstream tasks
    execution_id = response.json()["execution_id"]
    context["ti"].xcom_push(key="execution_id", value=execution_id)
    return execution_id


# Define DAG
with DAG(
    "monthly_financial_report",
    schedule_interval="@monthly",
    start_date=datetime(2023, 1, 1),
    catchup=False,
) as dag:
    # Airflow 2 passes the context to the callable automatically,
    # so provide_context=True is no longer needed
    run_report = PythonOperator(
        task_id="run_financial_report",
        python_callable=run_nbforge_notebook,
    )
```

Deploy on major cloud providers (AWS, GCP) or on-premises, with Kubernetes orchestration for optimal resource utilization and scalability.
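On Kubernetes, per-notebook requests like the `cpu_milli` and `memory_mib` fields shown earlier map naturally onto container resource quantities: the `m` suffix means thousandths of a CPU core and `Mi` means mebibytes, so 2000 millicores is two cores and 4096 MiB is 4 GiB. The sketch below assumes a one-to-one translation with requests set equal to limits; `to_k8s_resources` is a hypothetical helper, not NBForge's actual scheduler code.

```python
def to_k8s_resources(resources: dict) -> dict:
    """Hypothetical mapping from an NBForge-style resource request to a
    Kubernetes container `resources` block."""
    cpu = f"{int(resources['cpu_milli'])}m"        # millicores: 1000m == 1 CPU core
    memory = f"{int(resources['memory_mib'])}Mi"   # mebibytes: 1024Mi == 1Gi
    quantities = {"cpu": cpu, "memory": memory}
    # Requests equal to limits for CPU and memory gives the pod the
    # "Guaranteed" QoS class, provided every container in the pod does the same
    return {"requests": dict(quantities), "limits": dict(quantities)}


print(to_k8s_resources({"cpu_milli": 2000, "memory_mib": 4096}))
# {'requests': {'cpu': '2000m', 'memory': '4096Mi'}, 'limits': {'cpu': '2000m', 'memory': '4096Mi'}}
```

Setting limits equal to requests trades burst capacity for predictable scheduling; a platform could instead set only requests so that notebooks can use spare CPU on the node.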

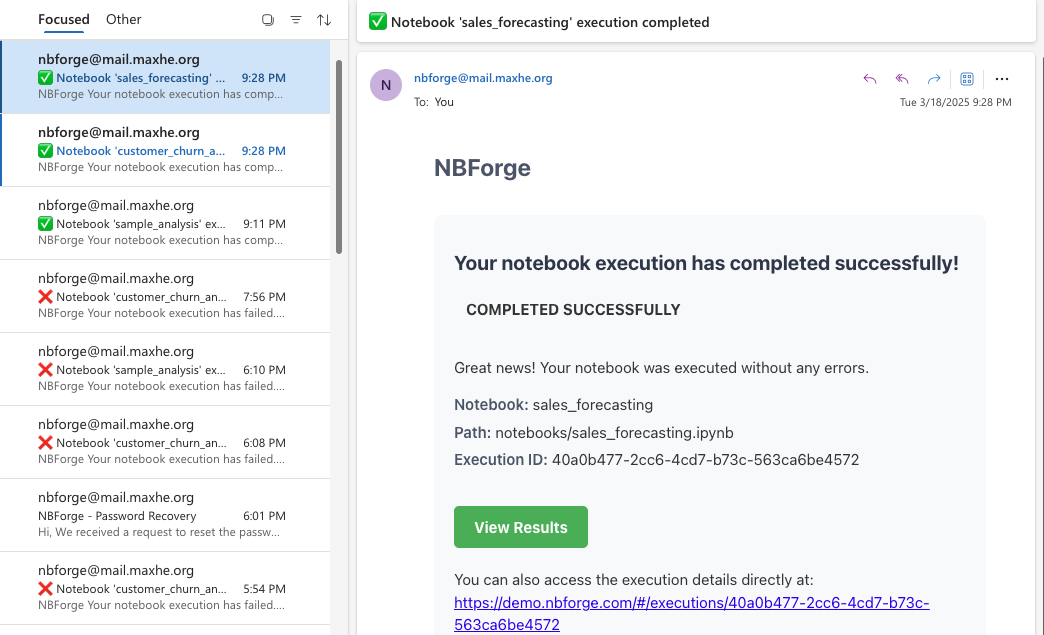

Receive timely email notifications when notebook executions complete or fail, allowing you to focus on other tasks while long-running processes execute.
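A completion or failure notice is ultimately just an email message. The sketch below composes one with Python's standard `email` package; the subject format, the `build_notification` helper, and the SMTP host in the comment are illustrative assumptions, not NBForge's actual notifier.

```python
from email.message import EmailMessage
from typing import Optional


def build_notification(notebook_path: str, succeeded: bool, duration_s: float,
                       sender: str, recipients: list[str],
                       error: Optional[str] = None) -> EmailMessage:
    """Hypothetical helper: compose a completion/failure email for one run."""
    status = "succeeded" if succeeded else "failed"
    msg = EmailMessage()
    msg["Subject"] = f"[NBForge] {notebook_path} {status}"
    msg["From"] = sender
    msg["To"] = ", ".join(recipients)
    lines = [f"Notebook {notebook_path} {status} after {duration_s / 60:.1f} minutes."]
    if error:
        lines.append(f"Error: {error}")
    msg.set_content("\n".join(lines))
    return msg


msg = build_notification("finance/monthly_report.ipynb", False, 5400,
                         "nbforge@example.com", ["analyst@example.com"],
                         error="KeyError: 'revenue'")
print(msg["Subject"])
# Sending is a separate step, e.g. with a reachable SMTP server:
# with smtplib.SMTP("smtp.example.com") as smtp:
#     smtp.send_message(msg)
```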

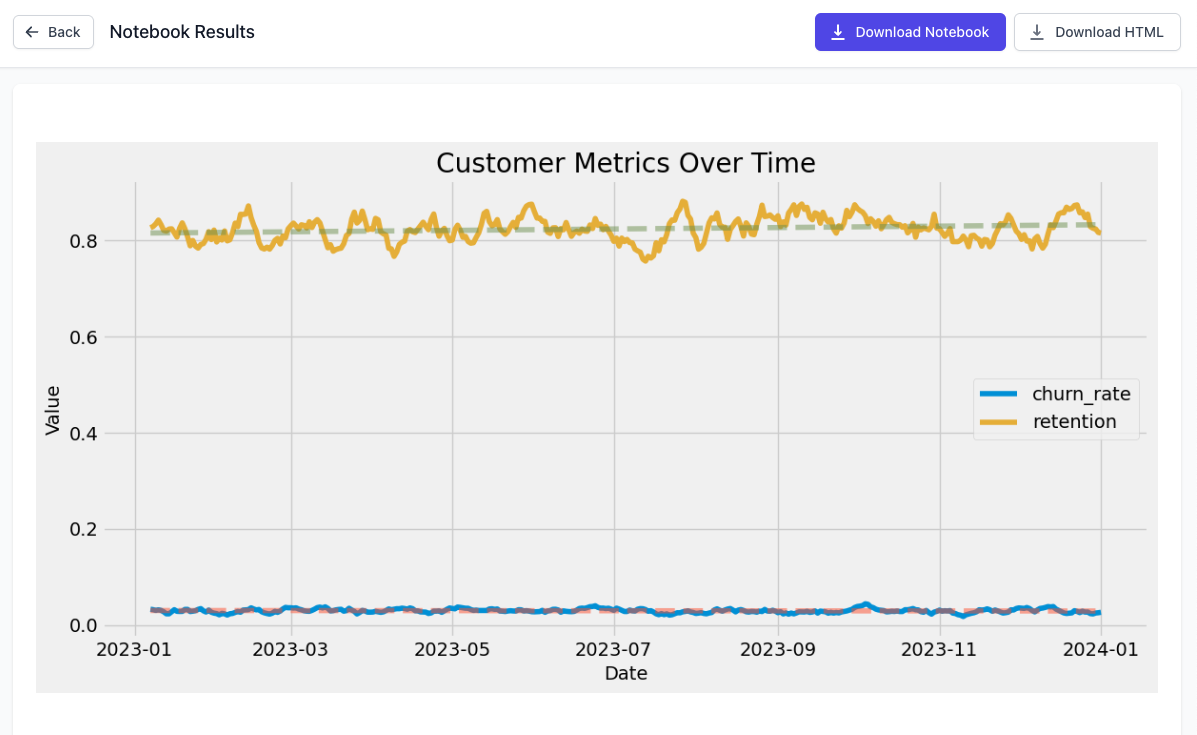

Generate beautiful HTML reports from notebook outputs that can be easily shared with stakeholders, complete with interactive visualizations and formatted results.

Eliminate manual notebook modifications that lead to inconsistent results and errors. Every execution follows the same validated process, ensuring reliability and trust in your outputs.

Hi there! I created NBForge and would love to chat about how it can work for your data science team. If you have any questions about getting it set up, integrating it with what you already have, or tweaking it to fit your needs, just reach out!

Transform how your organization uses Jupyter notebooks